|

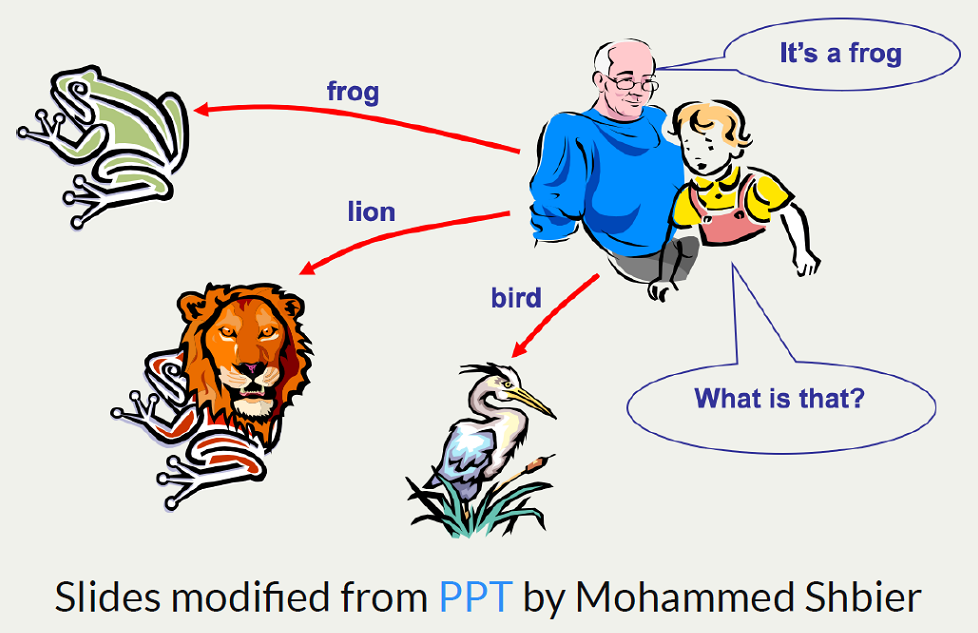

Artificial Intelligence (AI) is a field of computer science that combines algorithms, mathematical and statistical models, and programming languages with the aim of mimicking human intelligence. AI is implemented using cognitive analytics and computing, machine learning, and machine vision, along with hardware, to enable computers, robots, and other systems to operate autonomously. Humans and animals use natural intelligence to react independently to a situation and take the actions needed to reach a preferred outcome.

|

| |

|

Human Intelligence (natural intelligence)

|

|

A newborn initially cries when hungry, uncomfortable, or in need of a diaper change. Day by day the baby starts to recognize its parents, first by how it is held and carried (tactile comprehension) and later by sight (facial/pattern recognition). The baby tries to mimic the parents' voices with different noises and sounds (audio/voice comprehension) until it can communicate in meaningful words and sentences. Because humans eat and react to adverse conditions such as a foul smell, further sensory intelligence is acquired by remembering how things smell.

At each stage, the newly acquired intelligence is stored in the human brain (human learning). This lets humans take logical and intelligent actions, to the best of their ability, in day-to-day activities. There has been a great deal of research into how the human brain works, including analysis of the brains of well-known geniuses such as Albert Einstein to understand how a genius's brain differs from that of an average person.

|

| |

|

| |

|

In 1950, Alan Turing, a British mathematician and computer scientist, wrote about machines (computers) that could think. He is widely considered a father of Artificial Intelligence. Since then, many research organizations, such as DARPA, and universities around the world have worked on creating machines that can think independently. John McCarthy, a well-known US computer scientist who is also considered a founder of AI, named the field of thinking machines "Artificial Intelligence".

|

| |

|

During graduate research on a robotic dexterous hand several years ago, I encountered this issue firsthand while using software to simulate the hand grasping objects such as a ball. The software simulation of the mechanical grasp was a success. The dexterous hand was taught (in machine learning terms, this resembles supervised learning) to grab hard balls such as a baseball. The simulation was then tested mechanically using an industrial robotic gripper with three fingers.

|

| |

|

The big challenge came when the object to grasp was suddenly switched from a baseball to an egg or a ping-pong ball in a demo robot using the same fingered gripper. Without AI (intelligent decision-making algorithms), and with no visual feedback to the gripper, the egg would be crushed. For a dexterous hand or robotic gripper to hold objects autonomously without damaging them, it needs AI, machine learning algorithms, and software and hardware with embedded machine vision.
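
The idea of adapting the grip to the object can be illustrated with a minimal sketch. It assumes a vision system has already produced an object label, and it stops closing the fingers once a per-object force limit is reached. The force limits, the `SimulatedGripper` class, and the `grasp` function are all hypothetical and are not taken from the original experiment.

```python
# Hypothetical contact-force limits in newtons. Illustrative values only,
# not measurements from the experiment described above.
FORCE_LIMIT_N = {"baseball": 20.0, "ping_pong_ball": 2.0, "egg": 1.5}
UNKNOWN_OBJECT_LIMIT_N = 1.0  # be gentle when the object is not recognized


class SimulatedGripper:
    """Toy gripper: force rises linearly once the fingers touch the object."""

    def __init__(self, contact_step=10, newtons_per_step=0.1):
        self.position = 0
        self.contact_step = contact_step
        self.newtons_per_step = newtons_per_step

    def read_force(self):
        # No force until contact, then a linear increase per closing step.
        return self.newtons_per_step * max(0, self.position - self.contact_step)

    def step_closed(self):
        self.position += 1


def grasp(gripper, object_label, max_steps=1000):
    """Close the fingers until the force limit for the labeled object is reached."""
    limit = FORCE_LIMIT_N.get(object_label, UNKNOWN_OBJECT_LIMIT_N)
    for _ in range(max_steps):
        if gripper.read_force() >= limit:
            break
        gripper.step_closed()
    return gripper.read_force()


egg_force = grasp(SimulatedGripper(), "egg")
ball_force = grasp(SimulatedGripper(), "baseball")
print(f"egg: {egg_force:.1f} N, baseball: {ball_force:.1f} N")
```

A gripper trained only on baseballs would in effect always use the baseball limit, which is how the egg ends up crushed. A lookup table like this is still fixed logic; a learning system would estimate the limit from vision and touch.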

|

| |

|

An animal running through forest terrain will spontaneously jump over an obstacle or turn away from it using its natural intelligence. AI provides the tools and technology for a machine to achieve or mimic such intelligence. To perform such maneuvers autonomously using AI, a machine has to:

|

| |

|

1. Compute the speed

|

|

2. Constantly make adjustments to speed based on distance

|

|

3. Avoid obstacles using vision (camera, hardware and software)

|

|

4. Constantly adjust the mechanical devices that serve as legs or motion systems, based on the terrain

|

|

5. Think and make spontaneous decisions using built-in AI

|

| |

|

to slow down, maintain speed, change path, stop, or jump over an obstacle. At a high level, this list shows the complexity (intelligence, cognitive analytics) that has to be built into a robot. The system also has to learn continually from the new situations it encounters.
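
The decision step in the list above can be sketched as a small rule-based function. This is only an illustration under stated assumptions: the thresholds, the parameter names, and the `choose_action` function are hypothetical, and a real robot would learn or tune this behaviour from sensor data rather than use fixed rules.

```python
from enum import Enum


class Action(Enum):
    MAINTAIN_SPEED = "maintain speed"
    SLOW_DOWN = "slow down"
    CHANGE_PATH = "change path"
    JUMP = "jump"
    STOP = "stop"


def choose_action(speed_mps, obstacle_distance_m, obstacle_height_m=0.0,
                  side_path_clear=False, max_jump_height_m=0.3,
                  reaction_time_s=0.5, max_decel_mps2=3.0):
    """Pick one of the five actions from speed, distance, and vision inputs."""
    if obstacle_distance_m is None:  # vision reports nothing ahead
        return Action.MAINTAIN_SPEED

    # Distance covered while reacting plus distance needed to brake to a halt.
    stopping_distance_m = (speed_mps * reaction_time_s
                           + speed_mps ** 2 / (2 * max_decel_mps2))

    if obstacle_distance_m > 2 * stopping_distance_m:
        return Action.MAINTAIN_SPEED  # obstacle is still far away
    if side_path_clear:
        return Action.CHANGE_PATH
    if obstacle_height_m <= max_jump_height_m:
        return Action.JUMP
    if obstacle_distance_m > stopping_distance_m:
        return Action.SLOW_DOWN
    return Action.STOP


print(choose_action(speed_mps=2.0, obstacle_distance_m=3.0,
                    obstacle_height_m=1.0, side_path_clear=False))
```

Fixed rules like these cover only the situations their author anticipated; the learning requirement described above is what separates such a controller from an AI-driven one.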

|

| |

|

| |

|

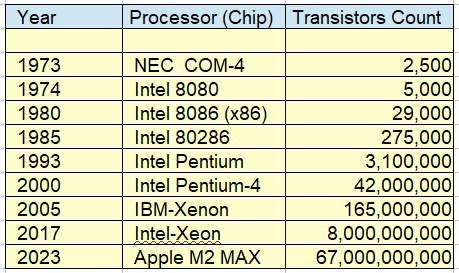

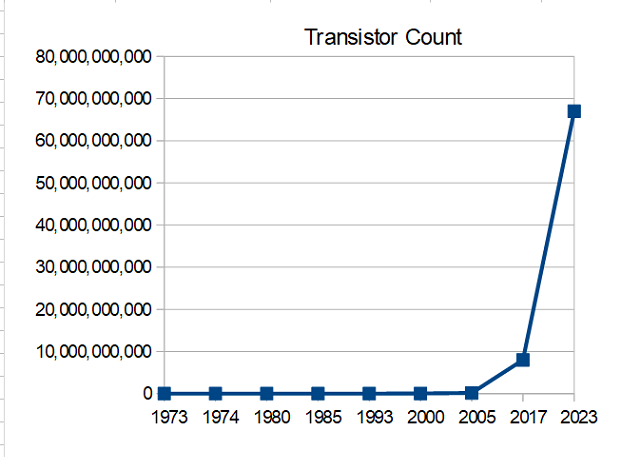

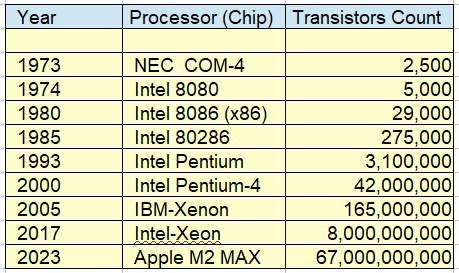

From the 1980s to now there has been enormous progress in computational power due to advances in chip architecture and integration technology (CPU, VLSI, ULSI, etc.), which has made AI a reality in recent years. The table shows how the transistor count grew from 2,500 on a chip in the 1970s to 67 billion on a single chip in 2023. IBM's Deep Blue supercomputer achieved a level of play sufficient to defeat world chess champion Garry Kasparov in a couple of games in a tournament. Autonomous computers and machines continue to evolve with enhancements in AI. As computing power and other technologies improve, the evolution of AI is expected to keep accelerating.
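
The transistor figures quoted above imply a doubling time that can be checked with a few lines of arithmetic. The sketch below assumes a start year of 1971, since the text only says "the 1970s"; the `doubling_time_years` helper is hypothetical.

```python
import math


def doubling_time_years(start_count, end_count, start_year, end_year):
    """Average number of years per doubling between two transistor counts."""
    doublings = math.log2(end_count / start_count)
    return (end_year - start_year) / doublings


# 2,500 and 67 billion come from the text; 1971 is an assumed start year.
years = doubling_time_years(2_500, 67e9, start_year=1971, end_year=2023)
print(f"about {years:.1f} years per doubling")
```

Under that assumption the count doubled roughly every two years, in line with the usual statement of Moore's law.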

|

| |

|

| |

|

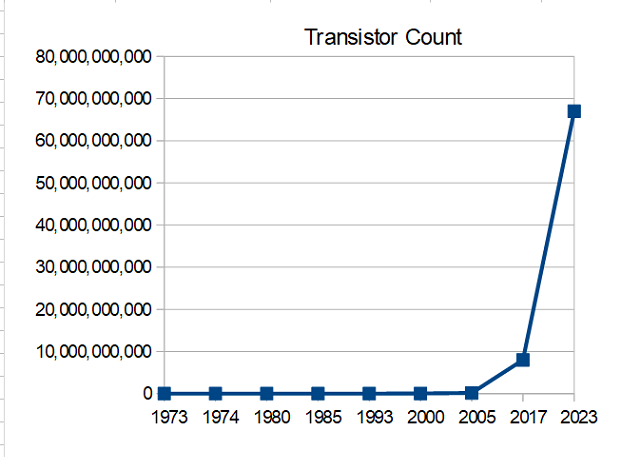

The CEO of an AI research company has described how venture capital firms ignored AI and ML funding around 2010. Going back further, to the 1990s, AI was considered a fantasy, and recruiters would advise the candidates they were promoting for placement at client companies to remove AI from their resumes. The graph above suggests a good reason for the change: growth in computing power has made AI and ML practical in the present day.

|

| |

|

Research that came out of AI and robotics in the early years was adopted by other technologies in stages. Good examples include machine vision (computer vision) in medical diagnosis, shortest-path algorithms for finding the optimal path between two points, and obstacle avoidance, to name a few.
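
As an illustration of shortest path and obstacle avoidance together, here is a minimal sketch using breadth-first search on a small grid, where `1` marks an obstacle. The grid, the `shortest_path` function, and the choice of breadth-first search are assumptions made for this example; real systems often use Dijkstra's algorithm or A* on weighted maps.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Return the shortest list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    previous = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = previous[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in previous:
                previous[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal is unreachable


GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = shortest_path(GRID, start=(0, 0), goal=(3, 0))
print(path)
```

Because every move costs the same, breadth-first search is guaranteed to find a path with the fewest steps; here the route has to pass through the single gap in the second row.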

|

|

Some of the top programming languages used in AI are Python, Prolog, Java, C++, and LISP.

In the 1980s there was research on expert systems that were expected one day to solve most human challenges. Now we have ChatGPT and other AI-based tools built on natural language processing (NLP) and large language models (LLMs). These tools interpret user input, provide answers and solutions that are often accurate, and are well on the way toward a higher level of Artificial Intelligence.

|

| |

|

Weak AI

|

|

Machine intelligence has been implemented for quite some time. Many modern automobiles have intelligence such as emergency braking when the vehicle in front stops or slows down, and speed adjustment in adaptive cruise control, which also monitors the car in front using intelligent sensors, radar, hardware, and software. There are also autonomous vehicles that drive on their own. Systems that operate with some human interaction are typically weak or basic AI. Many IoT devices, traffic signals, and other embedded devices with intelligence are further examples.
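
The speed adjustment in adaptive cruise control can be sketched as a simple proportional rule that follows the lead car while keeping a time gap. The function name, the gain, and the gap values below are hypothetical and are not taken from any production system.

```python
def acc_target_speed(own_speed_mps, set_speed_mps, gap_m=None,
                     lead_speed_mps=None, time_gap_s=2.0,
                     min_gap_m=5.0, gain=0.5):
    """Return the speed to aim for, given radar readings of the car in front."""
    if gap_m is None or lead_speed_mps is None:
        return set_speed_mps  # road ahead is clear: hold the driver's set speed

    # Desired spacing grows with speed (a constant time-gap policy).
    desired_gap_m = min_gap_m + time_gap_s * own_speed_mps
    gap_error_m = gap_m - desired_gap_m

    # Match the lead car's speed, corrected in proportion to the gap error.
    target = lead_speed_mps + gain * gap_error_m

    # Never exceed the set speed and never command a negative speed.
    return max(0.0, min(set_speed_mps, target))


print(acc_target_speed(own_speed_mps=30.0, set_speed_mps=30.0,
                       gap_m=40.0, lead_speed_mps=25.0))
```

The driver still chooses the set speed and stays responsible for steering, which is why this kind of feature fits the weak or basic AI category described above.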

|

| |

|

Strong AI

|

|

Machines that operate in autonomous mode, that is, that "think on their own and take corrective actions without human intervention", can be considered strong or advanced AI. Examples include autonomous robots and automated guided vehicles (AGVs) that operate independently without human interaction, avoid obstacles, plan the best route between a set of points, and make independent decisions. They use artificial intelligence at the highest level and can be considered state of the art. There will be a day when an autonomous robot evaluates its own battery life and safely replaces the battery on its own when the remaining charge reaches or falls below a certain value, say 15%.

|

| |

|

What is Not AI

|

|

Too many applications loosely tag automatic emails as "AI-Message" and the like. The majority of them are fixed or pre-designated application workflow management or automation, with no thinking or intelligent assessment involved. A good example: if we search a court document for a specific string such as "money laundering", the application simply highlights where the string occurs in the document. This is a standard feature that has been present in word processors since their earliest versions.
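
To make the point concrete, the search described above amounts to a few lines of ordinary string matching. The `find_all` helper and the sample text are hypothetical; the code only reports where the phrase occurs and performs no assessment of what the document means.

```python
import re


def find_all(text, phrase):
    """Return the start index of every case-insensitive match of phrase in text."""
    if not phrase:
        return []
    pattern = re.compile(re.escape(phrase), re.IGNORECASE)
    return [match.start() for match in pattern.finditer(text)]


# Made-up sample text for illustration only.
document = ("Count 1 alleges money laundering through shell companies. "
            "The Money Laundering charge carries a separate penalty.")
positions = find_all(document, "money laundering")
print(positions)
```

Nothing here understands the charges or weighs the evidence, which is the distinction the section draws between automation and AI.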

|

| |

|

Enabling AI

|

|

The search above becomes AI-enabled if the system can assess what is being searched for and provide an intelligent assessment, such as what types of crimes were committed and what is questionable or can be excluded, simplifying a lawyer's job through the use of an LLM. Processing becomes faster, and errors and wrong assessments can be avoided or minimized.

|

|

Aerospace

|

|

Air traffic control, intelligent traffic management based on weather/traffic conditions

|

| |

|

Automotive and Related Applications

|

|

Autonomous/driverless vehicles, intelligent traffic and signal

management, autonomous highway speed management based on

weather/traffic conditions

|

| |

|

Finance Applications

|

|

Fraud detection, mortgage data analysis, loan processing, stock/trade automation, cryptocurrency, fintech, and so on.

|

| |

|

IT Applications

|

|

Software development, system management, data management,

autonomous process management, cyber security, network

management

|

| |

|

Medical Science

|

|

Autonomous surgery with minimal human intervention, basic diagnosis of a person's health, fully automated interaction with all health management systems, drug development

|

| |

|

Robotics

|

|

Autonomous operations in manufacturing systems, humanoid robots

|